Can AI predict relapse?

Artificial intelligence (AI) is becoming a valuable tool for identifying circumstances in which psychiatric deterioration can occur. Although relapse is most often associated with addiction and substance abuse, people can also relapse into the symptoms of other psychological conditions.

Summary

- AI enables early relapse detection: By analyzing large volumes of data through machine learning and natural language processing (NLP), AI can detect subtle changes in behavior, mood, or language that may indicate an impending relapse, sometimes before human clinicians notice them.

- Tools like Wysa, Youper, and Kintsugi support real-time monitoring: These AI-powered mental health apps offer self-guided CBT, voice analysis, and chatbot interactions that can alert therapists to rising risk factors, enabling timely interventions and more personalized care.

- AI enhances but doesn’t replace clinical judgment: While AI can uncover patterns and provide valuable insights, therapists must interpret this information within a broader clinical context. AI is a tool to support, not substitute, the human therapeutic relationship.

- Ethical considerations are essential: Therapists must address issues like false positives, data privacy, and informed consent when integrating AI into care. Transparency and client education are critical to the responsible use of AI in relapse prevention.

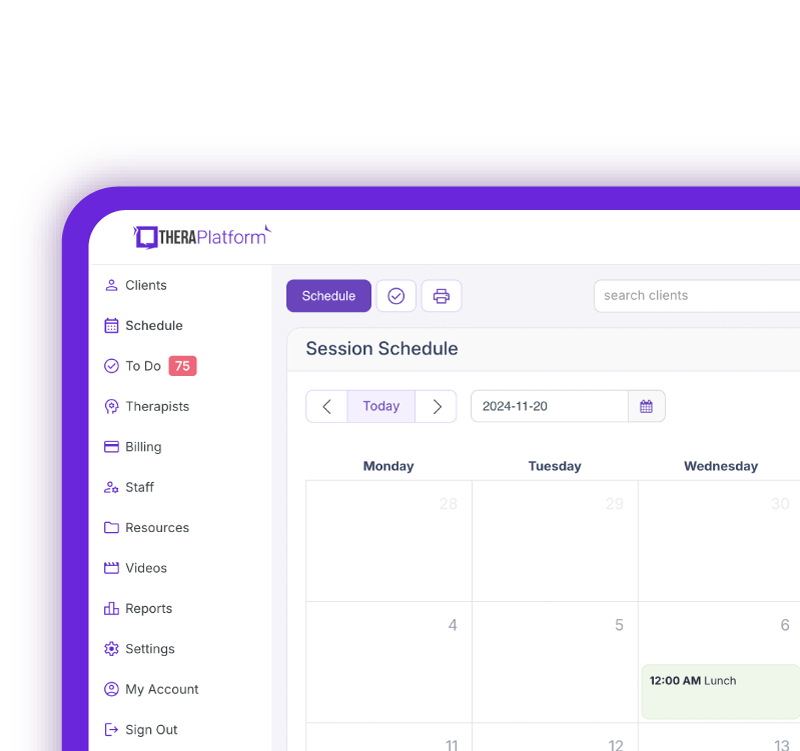

- Using EHR systems with integrated AI notes, such as TheraPlatform, can significantly streamline tasks like documentation.

Streamline your practice with One EHR

- Scheduling

- Flexible notes

- Template library

- Billing & payments

- Insurance claims

- Client portal

- Telehealth

- E-fax

Symptoms of mood and anxiety disorders can lie dormant until environmental cues and insufficient coping mechanisms trigger them. Early detection of a mental health relapse is therefore key to treatment success, since timely interventions increase the likelihood of positive outcomes (Pennsylvania Psychiatric Institute, n.d.).

Here is what you need to know about how AI can predict relapse.

How is AI used to predict relapse?

Artificial intelligence has benefited greatly from the transition to electronic health records. It can process large quantities of data and analyze patterns consistently, although its output is only as objective as the data it learns from. The use of AI in predicting mental health relapse generally falls into two camps: machine learning and natural language processing. Let’s examine how therapists can use each in relapse prediction.

Using Machine Learning (ML) for predicting mental health relapse

Machine learning looks for broad patterns in a client’s records, including therapy notes and test results. If given access, it can also review smartphone usage, data from wearable devices, and social media activity. An ML model is trained on such data and then uses the patterns it has learned to predict future outcomes, which lets it flag subtle changes that a human being might miss.

For example, clinicians can use ML to identify recurring patterns of behavior in individuals with a history of psychiatric episodes. Bipolar disorder, which tends to follow cycles of mood and behavior, is a prime candidate for ML analysis. Not surprisingly, ML has been applied to predict relapse, hospitalization, and suicide in bipolar disorder using neuroimaging and clinical data (Amanollahi et al., 2024).

Natural Language Processing (NLP) for predicting mental health relapses

NLP is a branch of AI that, in its modern form, relies heavily on machine learning and applies it specifically to language. It can provide a nuanced analysis of an individual’s data by examining the words and language patterns they use. This makes it a potentially significant development for the role of AI in mental health diagnosis and relapse prevention.

What makes NLP special is that it can detect emotional tone and underlying themes in text, somewhat as a human reader would. For example, NLP has been used to detect linguistic markers associated with psychotic episodes in people with schizophrenia, potentially several weeks before relapse occurs (Zaher et al., 2024).
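As a rough illustration of the kind of signal NLP works with, the sketch below computes a few surface-level language features from a text sample and compares them with the same client's earlier baseline. The specific features (lexical diversity, first-person pronoun rate, words per sentence) and the helper names are assumptions chosen for simplicity; research tools typically use much richer measures, and nothing here is a validated clinical marker.

```python
import re

# Hypothetical feature set, chosen only for illustration
FIRST_PERSON = {"i", "me", "my", "mine", "myself"}

def language_features(text: str) -> dict:
    """Compute a few simple surface features of a text sample."""
    # Split into sentences on ., !, ? and drop empty pieces
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Lowercase word tokens made of letters and apostrophes
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return {"type_token_ratio": 0.0, "first_person_rate": 0.0,
                "mean_sentence_length": 0.0}
    return {
        # Lexical diversity: distinct words divided by total words
        "type_token_ratio": len(set(words)) / len(words),
        # Share of words that are first-person singular pronouns
        "first_person_rate": sum(w in FIRST_PERSON for w in words) / len(words),
        # Average number of words per sentence
        "mean_sentence_length": len(words) / max(len(sentences), 1),
    }

def feature_shift(baseline: dict, current: dict) -> dict:
    """Difference between a current sample and the client's own baseline."""
    return {k: current[k] - baseline[k] for k in baseline}

baseline = language_features("I went to the market today. The weather was nice and I bought apples.")
recent = language_features("I can't. I don't know. I just can't.")
print(feature_shift(baseline, recent))
```

Comparing a client with their own earlier language, rather than with population averages, mirrors the idea of watching for change over time.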


Benefits of early relapse detection with AI

AI can be a valuable aid to psychotherapists in preventing relapse.

Here are some of the primary benefits:

- Proactive interventions: Because AI programs can interact with clients daily, they may be the first to detect emerging problems, allowing therapists to intervene before symptoms worsen. In turn, this can help prevent crises and hospitalizations.

- Treatment plan adjustments: Therapists may feel fully informed about their clients, but the concerns discussed in session are not always the only problems that exist. By drawing on multiple sources of ongoing information, AI can identify emerging difficulties and enable therapists to make informed adjustments to treatment plan goals and objectives. This keeps therapy focused on relevant concerns that could quickly escalate if left untreated.

- Enhanced therapist awareness: Therapists are human beings and can only consider so much information at once. AI can synthesize vast amounts of data and point out symptoms and patterns that may be cause for concern. This added awareness gives therapists time to formulate optimal interventions and can improve their day-to-day decision-making.
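The idea behind proactive alerts, flagging a change relative to a client's own pattern, can be sketched with a simple statistical rule. This is an illustrative assumption, not how Wysa, Youper, or any specific product works: the hypothetical `flag_deviation` helper compares today's self-rated mood with the mean and standard deviation of the client's recent ratings and flags unusually low values. The 2-standard-deviation threshold and 7-day minimum are arbitrary choices for the example.

```python
from statistics import mean, stdev

def flag_deviation(history, today, threshold=2.0, min_days=7):
    """Return True if today's value is unusually low versus the client's own history.

    history:   past daily values (e.g., self-rated mood on a 0-10 scale)
    today:     today's value
    threshold: how many standard deviations below the baseline counts as unusual
    min_days:  minimum number of past days needed to establish a baseline
    """
    if len(history) < min_days:
        return False  # not enough data to establish a baseline
    mu = mean(history)
    sd = stdev(history)  # sample standard deviation of the baseline
    if sd == 0:
        return today < mu  # perfectly flat baseline: any drop counts as a change
    z = (today - mu) / sd
    return z <= -threshold

recent_mood = [6, 7, 6, 7, 6, 7, 6, 7, 6, 7]
print(flag_deviation(recent_mood, 5))  # a drop well below this client's usual range
print(flag_deviation(recent_mood, 6))  # within the usual range
```

Comparing each client with their own history can surface changes that would look unremarkable against a population norm; the trade-off is that the threshold directly controls how many false alarms therapists receive.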

Real-world applications

Currently, most AI tools in mental health practice are used for administrative tasks, such as note-taking and scheduling. However, AI chatbots that provide support and basic guidance are growing in popularity.

Many of these tools use ML and NLP to act as “coaches” for people experiencing mental health difficulties. Some even steer clients through the steps of cognitive-behavioral therapy (CBT).

Let’s take a look at several applications that providers can use to assist with relapse prevention.

- Wysa: Wysa is a mental health self-help app with numerous features that complement therapy and support. It uses an AI chatbot to assign relevant CBT exercises and can detect signals that a client may be in crisis. It also features a “co-pilot” mode, designed specifically for use alongside a therapist. Its use has been associated with decreases in anxiety and depression symptoms (Leo et al., 2022).

- Youper: Youper is another popular 24/7 CBT self-help app that uses AI to target mental health problems. Its assessment function can identify relevant symptoms, and it may help prevent relapse by guiding clients through interventions aimed at reducing depression and anxiety (Mehta et al., 2021). Unlike Wysa, it does not have a feature that explicitly pairs it with a therapist, but clinicians can still use it to help monitor a client’s status.

- Kintsugi: Kintsugi demonstrates how AI can analyze voice biomarkers indicative of mental health challenges. It examines vocal patterns from a client’s voice journaling to flag signals associated with specific psychiatric conditions, such as depression. Therapists can therefore use Kintsugi as a screening measure that supports, rather than replaces, diagnosis and relapse prevention. In one evaluation, the tool’s voice biomarker results were compared with Patient Health Questionnaire-9 (PHQ-9) scores and were able to detect signals consistent with moderate to severe depression (Mazur et al., 2025).
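Comparisons like the Kintsugi evaluation rely on a reference standard such as the PHQ-9. The sketch below shows standard PHQ-9 scoring (nine items, each scored 0 to 3, for a total of 0 to 27) with the usual severity bands, and treats a total of 10 or more as moderate or worse, a common convention; the study's exact reference criteria may differ. The `tool_flag` argument stands in for a hypothetical screening tool's yes/no output and is not Kintsugi's actual API or output format.

```python
# Standard PHQ-9 severity bands (inclusive ranges of the 0-27 total)
SEVERITY_BANDS = [
    (0, 4, "minimal"),
    (5, 9, "mild"),
    (10, 14, "moderate"),
    (15, 19, "moderately severe"),
    (20, 27, "severe"),
]

def phq9_score(items):
    """Sum the nine item responses, each scored 0-3."""
    if len(items) != 9:
        raise ValueError("PHQ-9 has exactly 9 items")
    if any(i not in (0, 1, 2, 3) for i in items):
        raise ValueError("each item is scored 0-3")
    return sum(items)

def phq9_severity(total):
    """Map a PHQ-9 total to its severity label."""
    for low, high, label in SEVERITY_BANDS:
        if low <= total <= high:
            return label
    raise ValueError("total must be between 0 and 27")

def moderate_or_worse(total, cutoff=10):
    """Common convention: a total of 10 or more indicates moderate or worse depression."""
    return total >= cutoff

def agrees_with_phq9(tool_flag, items):
    """Does a hypothetical tool's yes/no flag match the PHQ-9 reference?"""
    return tool_flag == moderate_or_worse(phq9_score(items))

responses = [2, 1, 2, 1, 1, 2, 1, 1, 0]  # made-up example responses
total = phq9_score(responses)
print(total, phq9_severity(total), agrees_with_phq9(True, responses))
```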


Generative AI for relapse prediction

Generative AI is on the cutting edge of artificial intelligence. Rather than only classifying or analyzing information, it is trained on vast amounts of data and can generate original content, such as text, in response to prompts.

Popular applications such as ChatGPT and Gemini are examples of this form. Although these tools are not specifically designed for mental health, generative AI is likely to play a prominent role in relapse prevention going forward.

In a vignette study, for instance, ChatGPT-4 estimated the likelihood of suicide attempts with accuracy similar to that of mental health professionals. What’s more, in recognizing suicidal ideation, ChatGPT-4 appeared to be more precise than humans (Levkovich & Elyoseph, 2023).

What therapists need to consider before using AI for relapse prediction

Although AI can potentially contribute significantly to mental health treatment, it also has its limitations. Therapists must consider the following when using AI for relapse prevention:

False positives/negatives: AI is only as accurate as the data it learns from and is given to analyze. Electronic records and online sources can contain false and misleading information, and AI assessment tools often rely on client self-report, which may be biased or inaccurate. As a result, these tools can produce false positives (flagging a client as at risk who is not) and false negatives (missing a client who is deteriorating). Therapists need to use caution: an AI alert that a client may be at risk for relapse doesn't make it so, and the absence of an alert doesn't guarantee that a client is stable.
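The caution above can be made concrete with standard screening arithmetic. The numbers are hypothetical: 1,000 clients, 100 of whom relapse, and a tool with 90% sensitivity and 90% specificity. They are chosen only to show how false positives and false negatives are counted and why, when relapse is relatively uncommon, even a fairly accurate tool can produce as many false alarms as true ones.

```python
def screening_metrics(tp, fp, fn, tn):
    """Standard screening metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # share of actual relapses the tool flags
        "specificity": tn / (tn + fp),  # share of non-relapses correctly left unflagged
        "ppv": tp / (tp + fp),          # share of alerts that are real relapses
        "npv": tn / (tn + fn),          # share of non-alerts that are truly stable
    }

def ppv_from_rates(sensitivity, specificity, prevalence):
    """Positive predictive value computed from rates (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)

# Hypothetical: 100 of 1,000 clients relapse; the tool catches 90 of them (10 false
# negatives) and wrongly flags 90 of the 900 stable clients (90 false positives).
m = screening_metrics(tp=90, fp=90, fn=10, tn=810)
print(m["ppv"])  # only half of the alerts correspond to actual relapses
print(round(ppv_from_rates(0.9, 0.9, 0.1), 3))
```

Both functions give a positive predictive value of 0.5 for these inputs, which is the arithmetic behind "an alert doesn't make it so."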

Privacy and data security issues: Whenever you use technology, you must be aware of privacy and security issues. Clients frequently talk to AI chatbots about highly personal matters, and therapists must make sure this information does not reach unauthorized individuals. While most AI tools tout privacy features, not all are strictly HIPAA-compliant. Therapists should review the privacy practices of each app they recommend to clients to ensure confidentiality.

Ensuring client consent and transparency: Therapists must inform clients of both the benefits and the risks associated with AI. To do so, therapists need to understand how AI tools work, which requires ongoing education to stay current on recent developments. Clients also need to be told how clinicians will use AI in their specific treatment and given the right to opt out of its use. Accordingly, providers should update their consent forms to reflect the use of AI in an individual’s treatment.

AI can complement clinical judgment – not replace it

With its recent advancements, it may be tempting for therapists to use AI to automate their work. However, AI is not a replacement for therapists. Instead, it is a tool that can provide information to assist a therapist’s decision-making and make life easier. The therapeutic relationship is vital to the success of therapy, and AI cannot replace the empathy and emotion that are present in that collaboration.

AI exhibits immense potential to aid therapists in detecting early relapse. Its tools can help identify a client’s internal processes as well as environmental triggers that may precede psychiatric deterioration.

While it cannot replace a therapist’s clinical judgment, it can assist counselors in recognizing the psychological factors that lead to relapse. As AI evolves, therapists must receive ongoing training to stay current with emerging mental health resources and effectively incorporate these tools into their clinical practices.


Resources

TheraPlatform is an all-in-one EHR, practice management, and teletherapy solution with AI-powered note-taking features that allow you to focus more on client care. With a 30-day free trial, you can experience TheraPlatform for yourself with no credit card required, and you can cancel anytime. It also supports a range of disciplines, including mental and behavioral health therapists in both group and solo practices.

More resources

- Therapy resources and worksheets

- Therapy private practice courses

- Ultimate teletherapy ebook

- The Ultimate Insurance Billing Guide for Therapists

- The Ultimate Guide to Starting a Private Therapy Practice

- Mental health credentialing

- Insurance billing 101

- Practice management tools

- Behavioral Health tools

Free video classes

- Free on-demand insurance billing for therapist course

- Free mini video lessons to enhance your private practice

- 9 Admin tasks to automate in your private practice

References

Amanollahi, M., Jameie, M., Looha, M. A., A Basti, F., Cattarinussi, G., Moghaddam, H. S., Di Camillo, F., Akhondzadeh, S., Pigoni, A., Sambataro, F., Brambilla, P., & Delvecchio, G. (2024). Machine learning applied to the prediction of relapse, hospitalization, and suicide in bipolar disorder using neuroimaging and clinical data: A systematic review. Journal of Affective Disorders, 361, 778–797. https://doi.org/10.1016/j.jad.2024.06.061

Leo, A. J., Schuelke, M. J., Hunt, D. M., Miller, J. P., Areán, P. A., & Cheng, A. L. (2022). Digital mental health intervention plus usual care compared with usual care only and usual care plus in-person psychological counseling for orthopedic patients with symptoms of depression or anxiety: Cohort study. JMIR Formative Research, 6(5), e36203. https://doi.org/10.2196/36203

Le Glaz, A., Haralambous, Y., Kim-Dufor, D. H., Lenca, P., Billot, R., Ryan, T. C., Marsh, J., DeVylder, J., Walter, M., Berrouiguet, S., & Lemey, C. (2021). Machine learning and natural language processing in mental health: Systematic review. Journal of Medical Internet Research, 23(5), e15708. https://doi.org/10.2196/15708

Levkovich, I., & Elyoseph, Z. (2023). Suicide risk assessments through the eyes of ChatGPT-3.5 versus ChatGPT-4: Vignette study. JMIR Mental Health, 10, e51232. https://doi.org/10.2196/51232

Mazur, A., Costantino, H., Tom, P., Wilson, M. P., & Thompson, R. G. (2025). Evaluation of an AI-based voice biomarker tool to detect signals consistent with moderate to severe depression. Annals of Family Medicine, 23(1), 60–65. https://doi.org/10.1370/afm.240091

Mehta, A., Niles, A. N., Vargas, J. H., Marafon, T., Couto, D. D., & Gross, J. J. (2021). Acceptability and effectiveness of artificial intelligence therapy for anxiety and depression (Youper): Longitudinal observational study. Journal of Medical Internet Research, 23(6), e26771. https://doi.org/10.2196/26771

Olawade, D. B., Wada, O. Z., Odetayo, A., David-Olawade, A. C., Asaolu, F., & Eberhardt, J. (2024). Enhancing mental health with artificial intelligence: Current trends and prospects. Journal of Medicine, Surgery, and Public Health, 3, Article 100099. https://doi.org/10.1016/j.glmedi.2024.100099

Pennsylvania Psychiatric Institute. (n.d.). The power of early intervention in mental health: A pathway to wellness and recovery. https://ppimhs.org/newspost/the-power-of-early-intervention-in-mental-health-a-pathway-to-wellness-and-recovery/

Zafar, F., Fakhare Alam, L., Vivas, R. R., Wang, J., Whei, S. J., Mehmood, S., Sadeghzadegan, A., Lakkimsetti, M., & Nazir, Z. (2024). The role of artificial intelligence in identifying depression and anxiety: A comprehensive literature review. Cureus, 16(3), e56472. https://doi.org/10.7759/cureus.56472

Zaher, F., Diallo, M., Achim, A. M., Joober, R., Roy, M. A., Demers, M. F., Subramanian, P., Lavigne, K. M., Lepage, M., Gonzalez, D., Zeljkovic, I., Davis, K., Mackinley, M., Sabesan, P., Lal, S., Voppel, A., & Palaniyappan, L. (2024). Speech markers to predict and prevent recurrent episodes of psychosis: A narrative overview and emerging opportunities. Schizophrenia Research, 266, 205–215. https://doi.org/10.1016/j.schres.2024.02.036